What is a data pipeline?

A data pipeline is a series of steps performed on data. These steps can include transforming, cleansing, and aggregating the data to make it suitable for analysis or modeling.
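To make the idea of "a series of steps" concrete, here is a minimal sketch in plain Python. The step functions (`cleanse`, `transform`, `aggregate`), the `run_pipeline` helper, and the sample records are all hypothetical and chosen only for illustration; a real pipeline would typically run on a dedicated platform rather than in a single script.

```python
from collections import defaultdict


def cleanse(records):
    """Drop records with a missing amount and strip whitespace from names."""
    return [
        {**r, "customer": r["customer"].strip()}
        for r in records
        if r.get("amount") is not None
    ]


def transform(records):
    """Normalise customer names to lower case so duplicates line up."""
    return [{**r, "customer": r["customer"].lower()} for r in records]


def aggregate(records):
    """Sum the amounts per customer."""
    totals = defaultdict(float)
    for r in records:
        totals[r["customer"]] += r["amount"]
    return dict(totals)


def run_pipeline(records, steps):
    """Feed the output of each step into the next one."""
    data = records
    for step in steps:
        data = step(data)
    return data


# Hypothetical sample input with messy names and one missing value.
raw = [
    {"customer": " Alice ", "amount": 10.0},
    {"customer": "alice", "amount": 5.0},
    {"customer": "Bob", "amount": None},
    {"customer": "Bob", "amount": 2.5},
]

result = run_pipeline(raw, [cleanse, transform, aggregate])
print(result)
```

Keeping each step as a small function that takes and returns records is one possible design; it makes steps easy to reorder or test on their own.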

Different types of pipelines

- Simple dataset

  This type usually involves simple fields such as names, addresses, and phone numbers which do not require any cleansing before they can be used in reporting/analysis.

- Intermediate dataset

  This type includes more complex datasets, which often contain multiple tables with many different types of attributes within each table (e.g., name, address, email). These datasets may also have dependencies, where one attribute needs a field from a different table to populate correctly.

- Complex dataset

  This type includes datasets with millions of records, which often require a variety of processing steps before they can be used for analysis or reporting (e.g., feature extraction and data quality checks).
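As an illustration of the kind of data quality check mentioned for complex datasets, the following sketch separates records that have all required fields from those that do not. The function name `check_quality`, the field names, and the sample records are assumptions made for this example, not part of any particular product.

```python
def check_quality(records, required_fields):
    """Split records into (valid, rejected) by whether required fields are non-empty."""
    valid, rejected = [], []
    for record in records:
        # A field counts as missing if it is absent, None, or an empty string.
        missing = [f for f in required_fields if not record.get(f)]
        if missing:
            rejected.append((record, missing))
        else:
            valid.append(record)
    return valid, rejected


# Hypothetical sample records.
records = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "", "email": "bob@example.com"},
    {"name": "Cy"},
]

valid, rejected = check_quality(records, ["name", "email"])
print(len(valid), "valid,", len(rejected), "rejected")
```

Returning the rejected records together with the reason they failed makes it easier to report problems back to the data owner instead of silently dropping rows.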


Benefits of using a data pipeline

- Processing speeds

  A data pipeline processes data more efficiently because analysis does not have to wait until every step has been completed.

- Security

  Because everything is handled within one platform, rather than by various users working on separate pieces and then trying to combine them, data security is improved.

- Access control

  Each user can view only the datasets/tasks they are assigned to, which helps reduce the risk of sensitive information being released to unauthorized personnel.

- Collaboration

  A data pipeline allows multiple users to work on different pieces without overlap or conflict. This saves time and makes it easy for users to see what someone else has already done before starting their own analysis.

- Overall efficiency and agile approach

  A data pipeline allows teams within an organization to work together more efficiently by sharing resources such as code libraries, reusable components (e.g., Part-of-Speech tagging), and datasets in real time. Users save the time that would otherwise be spent creating the pipeline themselves. It is easy to use, and you don't have to worry about the mistakes that might be made when building your own.

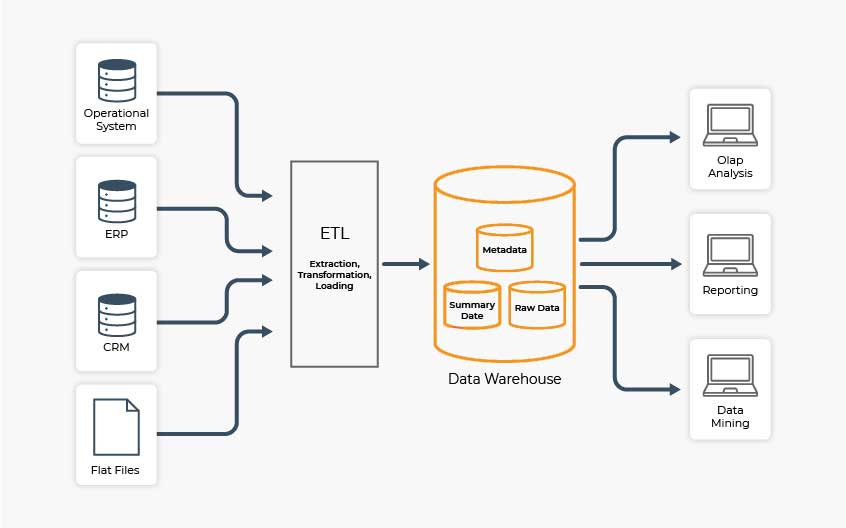

Data pipeline components

Data pipelines can be composed of various types of components, each with its own technical requirements and implementation challenges. A general structure for a typical data pipeline might look something like this:

Data pipeline tools and infrastructure

A data pipeline is a set of tools and components that help you to effectively manage datasets and turn them into actionable insights. The ultimate goal is to use the information found within these datasets to make better decisions about business direction. A successful data pipeline can be divided up into nine main parts:

How Lyftrondata helps to transform your Snowflake journey

Lyftrondata columnar ANSI SQL pipeline for instant data access

Many leading companies have invested millions of dollars building data pipelines manually but unfortunately were unable to reap the ROI. The result has mostly been a complex data-driven ecosystem that requires a lot of people, time, and money to maintain.

Lyftrondata removes all such distractions with the Columnar SQL data pipeline that supplies businesses with a steady stream of integrated, consistent data for exploration, analysis, and decision making. Users can access all the data from different regions in a data hub instantly and migrate from legacy databases to a modern data warehouse without worrying about coding data pipelines manually.

Lyftrondata’s columnar pipeline unifies all data sources into a single format and loads the data into a target data warehouse for use by analytics and BI tools. Instead of re-inventing the wheel by building pipelines manually, use Lyftrondata’s automated pipeline to make the right data available at the right time.

How it works

Lyftrondata’s columnar pipeline allows users to process and load events from multiple sources to target data warehouses via simple commands. All data pipelines in Lyftrondata are defined in SQL, so they can be scripted automatically instead of being built manually in a visual designer. Rest assured that you can sync and access your real-time data with sub-second latency using any BI tool you like.
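To illustrate the general idea of a pipeline that is defined entirely in SQL, here is a small sketch using Python's built-in `sqlite3` module. It does not use Lyftrondata's own syntax or commands, which are not shown in this article; SQLite is only a stand-in, and the table names `raw_events` and `purchases_by_user` are hypothetical.

```python
import sqlite3

# The whole "pipeline" is one SQL script: load raw events,
# then derive a reporting table from them.
PIPELINE_SQL = """
CREATE TABLE raw_events (user_id INTEGER, event TEXT, amount REAL);

INSERT INTO raw_events VALUES
    (1, 'purchase', 20.0),
    (1, 'purchase', 5.0),
    (2, 'refund', -3.0),
    (2, 'purchase', 8.0);

CREATE TABLE purchases_by_user AS
    SELECT user_id, SUM(amount) AS total
    FROM raw_events
    WHERE event = 'purchase'
    GROUP BY user_id;
"""

conn = sqlite3.connect(":memory:")  # in-memory stand-in for a target warehouse
conn.executescript(PIPELINE_SQL)    # run every statement in the script in order

rows = conn.execute(
    "SELECT user_id, total FROM purchases_by_user ORDER BY user_id"
).fetchall()
print(rows)
conn.close()
```

Because the pipeline is just text, it can be generated, versioned, and reviewed like any other script, which is the benefit the paragraph above describes.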

Know the heart and soul of data pipeline with Lyftrondata

- Source

  This layer lets you connect data from different sources into one place and avoid the complicated tasks of preparing data and setting up complex ETL processes. It ensures a hassle-free process of connecting through source connectors.

- Virtualization

  This layer lies just below “Source” and manages the unified data for centralized security. It provides a common abstraction over any data source type, shielding users from its complexity and from the back-end technologies it runs on.

- Caching

  This third layer caches the data used by SQL queries. Whenever a query needs data, the data is retrieved from this layer, which caches it on SSD and in memory.

- Metadata

  Beneath the caching layer, Lyftrondata maintains metadata that helps improve overall performance. This layer takes care of the scheduler, alerts, workflow, data catalog, logs, monitoring, execution plans, and more.

- Security

  Considered the heart of Lyftrondata, this layer handles one of the most crucial functions: enforcing security and managing encryption keys. It covers encryption, tagging, masking, access rights, and role management.

- Visualization

  This innermost layer lets users analyze, visualize, and explore massive volumes of data from disparate data sources, empowering them to draw real-time insights for business decisions.
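The caching layer described in the list above can be pictured, in very simplified form, as a read-through cache keyed by the query text. The sketch below is an assumption-laden illustration, not Lyftrondata's implementation: the `QueryCache` class is hypothetical, it caches in memory only (no SSD tier), it never invalidates entries, and SQLite stands in for the underlying source.

```python
import sqlite3


class QueryCache:
    """Hypothetical read-through cache: results are stored per SQL string."""

    def __init__(self, conn):
        self.conn = conn
        self._cache = {}
        self.hits = 0
        self.misses = 0

    def query(self, sql):
        # Serve repeated queries from memory instead of hitting the source.
        if sql in self._cache:
            self.hits += 1
            return self._cache[sql]
        self.misses += 1
        rows = self.conn.execute(sql).fetchall()
        self._cache[sql] = rows
        return rows


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("EU", 10.0), ("US", 7.5)])

cache = QueryCache(conn)
sql = "SELECT region, amount FROM sales ORDER BY region"
first = cache.query(sql)   # miss: runs against the database
second = cache.query(sql)  # hit: served from the in-memory cache
print(first, cache.hits, cache.misses)
```

A production cache would also need an eviction and invalidation policy so that stale results are not served after the source data changes.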